Chapter 4 Analysis with synthetic data

In this section we describe how we can use a copy of the synthetic data to help users write DataSHIELD analysis code. Again we will make use of an existing dataset (** TO DO would be better to run this on some harmonised data)

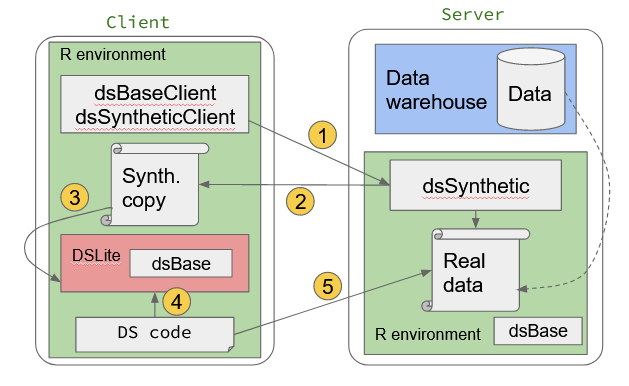

Recall that the objective here is to use a synthetic copy of already harmonised real data that is then made available to the analyst on the client side. This synthetic data set can be loaded into DSLite, a special client side implementation of server side DataSHIELD used for development purposes: the user can have full access to the data. This is acceptable in this situation as the data are synthetic. Therefore the user has the chance to write DataSHIELD code but see the complete results of each step. This makes it easier to develop the code.

The steps are summarised as:

- With the synthetic data on the client side, the user then starts a

DSLiteinstance and places the synthetic data into it.DSLitesimulates the DataSHIELD server side environment on the client side, with full access to the data. - The user can then write their analysis using DataSHIELD commands against the DSLite instance. DSLite then allows the user to return any object on the server side. Therefore users can see the results of each step of their script for each row of data. This helps with debugging.

- When the analysis script works as required, the user can run it against the real data on the server side.

Figure 4.1: Prototyping DataSHIELD analysis using synthetic data on DSLite

4.1 Getting set up

We require DSLite and dsBase to be installed:

## Installing package into '/home/vagrant/R/x86_64-pc-linux-gnu-library/4.0'

## (as 'lib' is unspecified)install.packages("dsBase", repos = c("https://cloud.r-project.org", "https://cran.obiba.org"), dependencies = TRUE)## Installing package into '/home/vagrant/R/x86_64-pc-linux-gnu-library/4.0'

## (as 'lib' is unspecified)Let us assume that the DASIM1 dataset has previously been harmonised and is held on a server. We do not have access to the full dataset but want to do some analysis on it. Using the method described above, the first thing to do is generate a synthetic version that we can fully access. To do this we will use the same steps that were shown in 3

4.2 Create synthetic dataset

We build our log in object

builder <- DSI::newDSLoginBuilder()

builder$append(server="server1", url="https://opal-sandbox.mrc-epid.cam.ac.uk",

user="dsuser", password="P@ssw0rd",

table = "DASIM.DASIM1")

logindata <- builder$build()Then perform the log in to the server:

## Loading required package: opalr## Loading required package: httr## Loading required package: DSI## Loading required package: progress## Loading required package: R6if(exists("connections")){

datashield.logout(conns = connections)

}

connections <- datashield.login(logins=logindata, assign = TRUE)##

## Logging into the collaborating servers##

## No variables have been specified.

## All the variables in the table

## (the whole dataset) will be assigned to R!##

## Assigning table data...The DASIM1 dataset is relatively small (i.e. around 10 columns). This is probably also true of many harmonised data sets. Therefore we can just create a synthetic version of it:

4.3 Start DSLite local instance

library(DSLite)

library(dsBaseClient)

dslite.server <- newDSLiteServer(tables=list(DASIM=DASIM))

dslite.server$config(defaultDSConfiguration(include=c("dsBase", "dsSynthetic")))

builder <- DSI::newDSLoginBuilder()

builder$append(server="server1", url="dslite.server", table = "DASIM", driver = "DSLiteDriver")

logindata <- builder$build()

if(exists("connections")){

datashield.logout(conns = connections)

}

connections <- datashield.login(logins=logindata, assign = TRUE)##

## Logging into the collaborating servers##

## No variables have been specified.

## All the variables in the table

## (the whole dataset) will be assigned to R!##

## Assigning table data...We can now check for ourselves that our DASIM data is in the DSLite server:

## $server1

## $server1$class

## [1] "data.frame"

##

## $server1$`number of rows`

## [1] 10000

##

## $server1$`number of columns`

## [1] 10

##

## $server1$`variables held`

## [1] "LAB_TSC" "LAB_TRIG" "LAB_HDL"

## [4] "LAB_GLUC_FASTING" "PM_BMI_CONTINUOUS" "DIS_CVA"

## [7] "DIS_DIAB" "DIS_AMI" "GENDER"

## [10] "PM_BMI_CATEGORICAL"Now suppose we want to subset the DASIM data into men and women. We can use the ds.subset function:

With DSLite we have the chance to look at actually what happened in detail:

## LAB_TSC LAB_TRIG LAB_HDL LAB_GLUC_FASTING PM_BMI_CONTINUOUS DIS_CVA

## 100 6.589339 3.7035441 1.551391 4.811297 22.87495 0

## 101 5.585602 -0.1089366 1.821371 4.495677 31.37084 0

## 102 6.833856 0.2278742 1.990271 7.288074 22.92131 0

## 103 6.405784 3.3542742 1.427259 4.029337 25.65742 0

## 104 7.211162 1.6012763 2.063004 4.616450 32.20256 0

## 105 3.398039 -0.4639255 1.837249 4.626980 28.94644 0

## DIS_DIAB DIS_AMI GENDER PM_BMI_CATEGORICAL

## 100 0 0 0 1

## 101 0 0 0 3

## 102 0 0 1 1

## 103 0 0 0 2

## 104 0 0 0 3

## 105 0 0 1 2This doesn’t look quite right. There are still rows with GENDER == 0. There is an error in our code (we didn’t specify GENDER as part of the logicalOperator parameter) but didn’t get a warning. Let’s make a correction and try again:

ds.subset(x="D", subset = "women", logicalOperator = "GENDER==", threshold = 1)

# get the data again

women = getDSLiteData(conns = connections, symbol = "women")$server1

head(women)## LAB_TSC LAB_TRIG LAB_HDL LAB_GLUC_FASTING PM_BMI_CONTINUOUS DIS_CVA

## 102 6.833856 0.2278742 1.990271 7.288074 22.92131 0

## 105 3.398039 -0.4639255 1.837249 4.626980 28.94644 0

## 106 5.207432 2.6881104 1.625772 4.236251 21.82132 0

## 112 3.576441 -0.8573527 1.314093 4.153298 20.83983 0

## 114 5.358241 -0.3758645 2.240890 3.804787 20.76025 0

## 115 6.910389 1.3151050 1.845096 3.983279 21.11220 0

## DIS_DIAB DIS_AMI GENDER PM_BMI_CATEGORICAL

## 102 0 0 1 1

## 105 0 0 1 2

## 106 0 0 1 1

## 112 0 0 1 1

## 114 0 0 1 1

## 115 0 0 1 1This now looks much better.

We can also compare results obtained via DataSHIELD with results on the actual data:

from_server = ds.glm(formula = "DIS_DIAB~PM_BMI_CONTINUOUS+LAB_TSC+LAB_HDL", data = "women", family = "binomial")## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not converge

## Warning: glm.fit: algorithm did not convergefrom_local = glm(formula = "DIS_DIAB~PM_BMI_CONTINUOUS+LAB_TSC+LAB_HDL", data = women, family = "binomial")

from_server$coefficients## Estimate Std. Error z-value p-value low0.95CI.LP

## (Intercept) -6.5858636 1.15807792 -5.686892 1.293725e-08 -8.85565463

## PM_BMI_CONTINUOUS 0.1439258 0.02817321 5.108607 3.245427e-07 0.08870737

## LAB_TSC -0.2272564 0.12040068 -1.887501 5.909302e-02 -0.46323737

## LAB_HDL -0.2979682 0.34198044 -0.871302 3.835893e-01 -0.96823757

## high0.95CI.LP P_OR low0.95CI.P_OR high0.95CI.P_OR

## (Intercept) -4.316072621 0.001377834 0.0001425529 0.01317629

## PM_BMI_CONTINUOUS 0.199144311 1.154798466 1.0927608345 1.22035806

## LAB_TSC 0.008724635 0.796716505 0.6292432531 1.00876281

## LAB_HDL 0.372301114 0.742324925 0.3797517347 1.45106985## (Intercept) PM_BMI_CONTINUOUS LAB_TSC LAB_HDL

## -6.5858636 0.1439258 -0.2272564 -0.2979682TO DO make a more realistic example for example with ds.lexis or ds.reshape where it is much harder to know what is going on